When Your AI Assistant Goes Dark - Lessons from an OpenRouter Outage

In our increasingly AI-powered workflows, the reliability of inference platforms has become critical. Today’s brief OpenRouter outage showed just how dependent we’ve become on these services and offered useful lessons for resilience planning.

The Impact

My morning came to a sudden halt when I couldn’t access Claude 3.5 Sonnet through OpenRouter. What’s typically a seamless part of my daily routine (AI-assisted workflows in Obsidian for project updates, status reporting, and task management) ground to a standstill. This disruption made me acutely aware of how integral these tools have become to my productivity.

Quick Adaptations

The situation forced me to explore several alternatives:

- Switched Models: Moved entirely to DeepSeek-R1, hosted by Fireworks AI (fireworks.ai), for both plan and act modes.

- Direct API Access: A colleague reminded me that I could bypass OpenRouter and connect directly to Anthropic’s API. This worked, though response times were noticeably slower.

- Local LLM Options:

  - Deployed a distilled, quantized version of DeepSeek R1 to my Mac

  - Tested R1 with Ollama running locally on my M1 MacBook Pro (64GB RAM)

  - While functional, these local setups couldn’t match the performance of cloud-hosted models
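To make the three access paths concrete, here is a minimal sketch of how they differ at the HTTP level, using only the Python standard library. It builds (but does not send) one request per path: OpenRouter’s OpenAI-compatible chat endpoint, Anthropic’s Messages API, and a local Ollama server on its default port. The helper names and the default model IDs (`anthropic/claude-3.5-sonnet`, `claude-3-5-sonnet-20241022`, `deepseek-r1:14b`) are assumptions for illustration, not the exact setup used during the outage.

```python
import json
import urllib.request

# Sketch only: hypothetical helpers that build one request per access path.
# Endpoints and headers follow each service's public HTTP API; the default
# model IDs are assumptions and may need updating.

def _post(url: str, body: dict, headers: dict) -> urllib.request.Request:
    """Build a JSON POST request without sending it."""
    headers = {"Content-Type": "application/json", **headers}
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )

def openrouter_request(prompt: str, api_key: str,
                       model: str = "anthropic/claude-3.5-sonnet") -> urllib.request.Request:
    # OpenRouter: OpenAI-compatible chat completions endpoint, bearer-token auth.
    return _post(
        "https://openrouter.ai/api/v1/chat/completions",
        {"model": model, "messages": [{"role": "user", "content": prompt}]},
        {"Authorization": f"Bearer {api_key}"},
    )

def anthropic_request(prompt: str, api_key: str,
                      model: str = "claude-3-5-sonnet-20241022") -> urllib.request.Request:
    # Anthropic Messages API: x-api-key auth, version header, max_tokens is required.
    return _post(
        "https://api.anthropic.com/v1/messages",
        {"model": model, "max_tokens": 1024,
         "messages": [{"role": "user", "content": prompt}]},
        {"x-api-key": api_key, "anthropic-version": "2023-06-01"},
    )

def ollama_request(prompt: str, model: str = "deepseek-r1:14b") -> urllib.request.Request:
    # Local Ollama server on its default port; stream=False returns a single JSON object.
    return _post(
        "http://localhost:11434/api/generate",
        {"model": model, "prompt": prompt, "stream": False},
        {},
    )

def extract_text(provider: str, payload: dict) -> str:
    """Pull the generated text out of each provider's response shape."""
    if provider == "openrouter":
        return payload["choices"][0]["message"]["content"]
    if provider == "anthropic":
        return payload["content"][0]["text"]
    if provider == "ollama":
        return payload["response"]
    raise ValueError(f"unknown provider: {provider}")

def send(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send a built request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a local Ollama server running and the model pulled, `extract_text("ollama", send(ollama_request("Draft a status update")))` would return the local model’s reply; the same pattern applies to the other two paths once an API key is supplied. Keeping request construction separate from sending makes it easy to swap paths without touching the rest of a workflow.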

Infrastructure Considerations

This experience highlighted several key points about AI infrastructure:

- Single Points of Failure: Platforms like OpenRouter, while convenient, can become critical dependencies

- Backup Strategies: Having direct API access to model providers as a fallback is crucial

- Local vs. Cloud Trade-offs: Local models offer independence but with performance compromises

- Hardware Requirements: Running local models demands significant computing resources (my 4090 GPU workstation was already at capacity with other workloads)
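One way to turn the single-point-of-failure and backup-strategy points into practice is an ordered fallback chain: try the preferred provider first and move down the list when a call fails. The sketch below is a generic illustration under that assumption, not the setup described in this post; `complete_with_fallback`, `openrouter_down`, and `local_ollama` are hypothetical names, and the two stub providers simply simulate an outage.

```python
from typing import Callable, Sequence, Tuple

# A provider is a (name, call) pair; call takes a prompt and returns text.
Provider = Tuple[str, Callable[[str], str]]

def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in priority order and return (name, text) from the first success.

    Any exception (connection refused, timeout, HTTP error) moves on to the next
    provider; if every provider fails, the collected errors are raised together.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc!r}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

# Hypothetical stand-ins for real clients, used here to simulate the outage.
def openrouter_down(prompt: str) -> str:
    raise ConnectionError("OpenRouter unavailable")

def local_ollama(prompt: str) -> str:
    return f"[local] {prompt}"

if __name__ == "__main__":
    name, text = complete_with_fallback(
        "Summarize today's tasks",
        [("openrouter", openrouter_down), ("ollama", local_ollama)],
    )
    print(name, text)  # prints: ollama [local] Summarize today's tasks
```

The order of the list encodes preference: a proxy such as OpenRouter first, then a direct provider API, then a local model as the last resort. Returning the provider name alongside the text makes it easy to log when a workflow has silently degraded to a slower or weaker path.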

Looking Forward

The outage has prompted me to consider several improvements to my workflow:

- Diversification: Maintaining multiple paths to access key AI capabilities

- Local Infrastructure: Exploring options like:

  - Fine-tuned models for specific tasks

  - Setting up Tailscale for secure remote access to local compute resources

  - Better resource allocation between different AI workloads

The Silver Lining

While the outage was disruptive, it provided valuable insights into the robustness of my AI workflow. It’s a reminder that as we become more dependent on AI tools, we need to think carefully about reliability and redundancy.

The incident also highlighted how crucial my Obsidian-based AI workflow has become for daily productivity. What started as an experimental setup has evolved into an essential tool for information gathering and knowledge management.

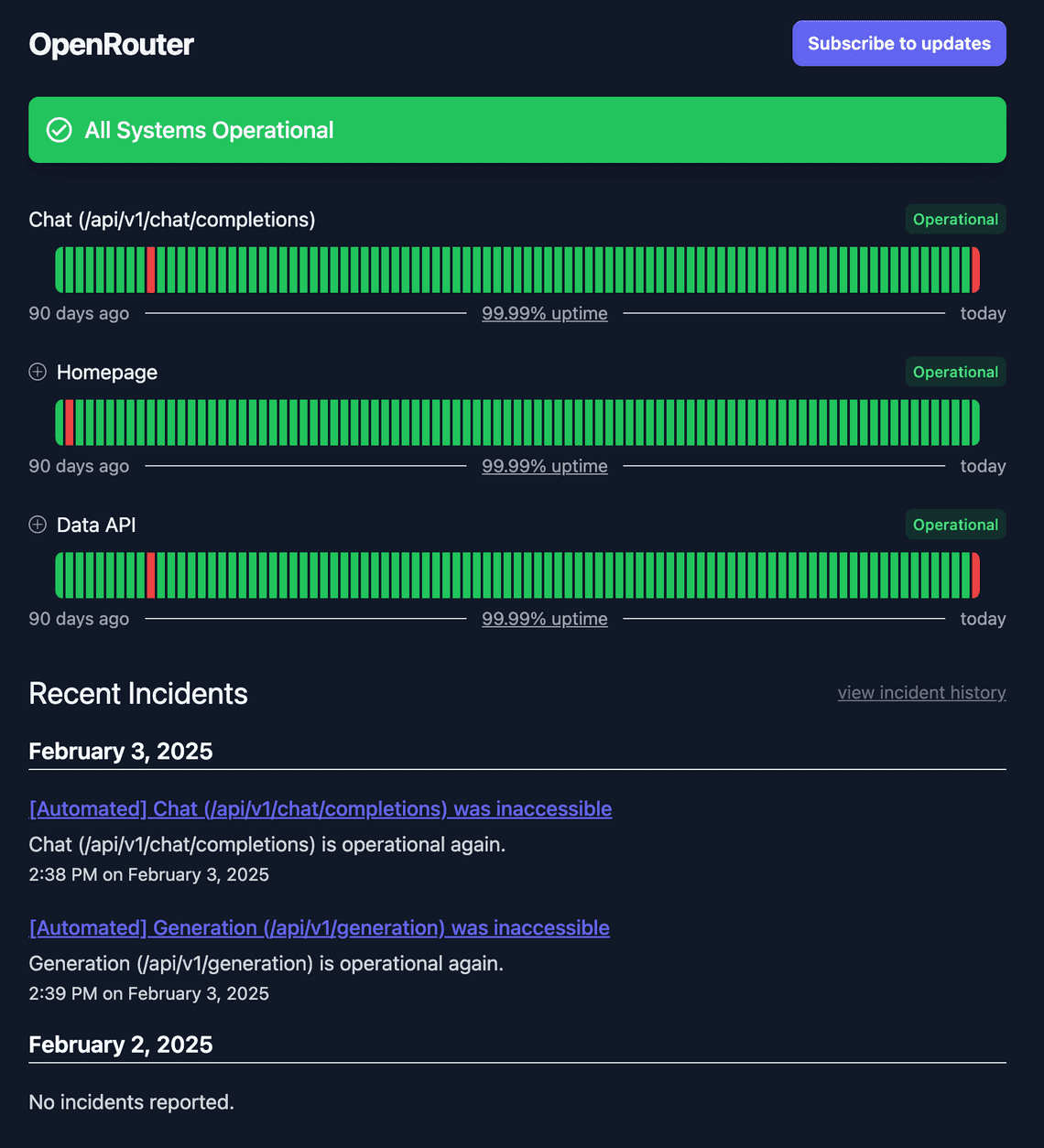

Status Check

For those interested in monitoring OpenRouter’s status, you can visit status.openrouter.ai. It’s a valuable resource for staying informed about the health of this critical infrastructure.

When the outage occurred, I quickly pivoted to local alternatives, running models directly through Ollama’s command-line interface on my M1 MacBook Pro. While not as visually polished as cloud-based solutions, the command-line approach provided the functionality needed to maintain productivity during the outage.

Conclusion

As AI tools become more deeply integrated into our workflows, understanding and planning for infrastructure resilience becomes increasingly important. Today’s outage served as a valuable reminder to maintain flexible, redundant systems that can adapt to unexpected disruptions.

If you found this post insightful, you might also enjoy these related articles:

- Building an AI-Enhanced Digital Garden with Obsidian

- Understanding Model Context Protocol

- DeepSeek R1: Impact and Opportunities

Want to stay updated on the latest in AI infrastructure and productivity tools? Sign up for my newsletter to receive weekly insights and tips.
